Why Machines Learn: The Elegant Maths Behind Modern AI by Anil Ananthaswamy

Do you love maths? Like, love maths deeply, equation-heavy maths? Like this type of maths?

No? Really? Why not?

OK, so this may, bear with me, not be the book for you. Because it is, well, maths-heavy. You see, the maths behind machine learning (OK, from now on I’m not going to spell anything out in full, as I’m pretty certain I’ve already turned off anyone who doesn’t know what the acronyms stand for) is insanely hard in comparison to 2 + 2 = 5.

But (I use ‘but’ a lot in my speech and writing; it has come to the fore the more I’ve written. Live with it.) please stick with the review, as I have an out, and a reason why you should still read this book.

Flyability

Yeah. No. As flyable as a brick. Don’t attempt to read this on a plane. You need this:

And a nice stereo (I’m sure you can see the hint of a pair of B&W 706 S3s on the left)… that’s what you need to read this book. Space. Peace. Reflection. Moments to go, ‘Eh? What did that mean? Was that really the matrix multiplication I understood from school?’

Quotes

Oh, I just want to put this in, because anything proved by reductio ad absurdum is awesome:

‘Cybenko’s proof by contradiction starts with the assumption that a neural network with one arbitrarily large hidden layer cannot reach all points in the vector space of functions, meaning it cannot approximate all functions. He then shows that the assumption leads to a contradiction and, hence, is wrong. It wasn’t a proof by construction, in that Cybenko did not prove some assertion. Rather, it was classic reductio ad absurdum. He started by assuming that some proposition was true and ended up showing that the proposition was false. “I ended up with a contradiction,” Cybenko said. “The proof was not constructive. It was an existence [proof].”’1
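Cybenko’s theorem only says such a network exists; it doesn’t hand you one. As a rough, hedged illustration, here is a constructive toy in Python with NumPy. This is my own sketch and not Cybenko’s argument, which is non-constructive. It uses a single hidden layer of steep sigmoid units, each switching on a small step, and their weighted sum forms a staircase that tracks sin(2πx) on [0, 1]. The helper name `staircase_network`, the target function, the numbers of hidden units and the steepness are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written via tanh to avoid overflow: 1/(1+e^-z) = (1 + tanh(z/2)) / 2
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def staircase_network(f, n_hidden, steepness):
    """One hidden layer of sigmoids: G(x) = b + sum_i a_i * sigmoid(w_i * x + t_i).
    Each hidden unit is a smoothed step; together they trace f on [0, 1] as a staircase."""
    nodes = np.linspace(0.0, 1.0, n_hidden + 1)      # sample points x_0 ... x_N
    values = f(nodes)
    switch_points = 0.5 * (nodes[:-1] + nodes[1:])   # where each step turns on
    w = np.full(n_hidden, float(steepness))          # hidden-layer weights
    t = -w * switch_points                           # hidden-layer biases
    a = np.diff(values)                              # output weights = step heights
    b = values[0]                                    # output bias

    def G(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        hidden = sigmoid(np.outer(x, w) + t)         # shape (len(x), n_hidden)
        return b + hidden @ a

    return G

f = lambda x: np.sin(2 * np.pi * x)
xs = np.linspace(0.0, 1.0, 2001)
for n_hidden in (5, 20, 200):
    G = staircase_network(f, n_hidden, steepness=50 * n_hidden)
    print(n_hidden, "hidden units -> max error", np.max(np.abs(G(xs) - f(xs))))
```

More hidden units give a finer staircase and a smaller worst-case error, which is the flavour of what the theorem promises. The theorem itself, though, says nothing about how many units you need.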

Ah ha moments

Now, I did intimate that The Gift of Fear was a very good pairing for this book. So look at this:

‘Before Alhazen, humanity’s attempts at understanding vision—our ability to see and perceive the world around us—were, in hindsight, very strange. One idea was known as the “intromission” theory, which essentially posited that we see an object because bits of matter of some form emanate from that object and enter our eyes: “Material replicas issue in all directions from visible bodies and enter the eye of an observer to produce visual sensation.” Some believed that those bits of matter were atoms. “The essential feature of this theory is that the atoms streaming in various directions from a particular object form coherent units—films or simulacra—which communicate the shape and color of the object to the soul of an observer; encountering the simulacrum of an object is, as far as the soul is concerned, equivalent to encountering the object itself.”’2

Now do you see how a book that delves deep into fear is the perfect red-wine pairing for this one? How spooky it is? The de Becker book talks about perception, and this book about machine learning does literally the same thing.

If you don’t understand how ‘we’ perceive, it is very hard, nay, impossible, to properly conceive of how computers perceive. Yet the singular problem I have with this view is very simple. We, as a species, are deeply arrogant and hubristic. We can’t conceive that the way an alien (the computer) perceives might not be the same as the way we do. It links to the old question: how do you know the aliens aren’t here already?

All we do is see things through the lens of our own lives and thoughts, and we don’t have the spine to accept that maybe there are alternative ways that things happen. So where does this get us? Let’s delve further into the book.

As I’ve stated, we are now at the point in this review where I’m assuming you know your shit. If not, oops. Life is hard. So let’s get into this:

‘Note that if you are given a Hopfield network with some stored memories, all you have access to are the weights of the network. You really don’t know what stored memories are represented by the weight matrix. So, it’s pretty amazing that when given the perturbed image shown above, our Hopfield network dynamically descends to some energy minimum. If you were to read off the outputs at this stage and convert that into an image, you would retrieve some stored memory.’3

Yet doesn’t this sound a bit familiar? If you take a brain out of its skull and sit it on a bench, it’s a bit hard to figure out how it ever thought or reacted to anything. So here we have ‘weights’, which are how the machine model ‘thought’. But we really don’t have a clue as to how it thought. Deeply familiar. We’ve sort of created an alien species that thinks in a way we don’t understand. So is this good or bad? I’m not sure.
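To make the Hopfield passage concrete, here is a minimal sketch in Python with NumPy. It assumes the textbook setup: ±1 units, Hebbian weights with a zeroed diagonal, energy E = -½ sᵀWs, and asynchronous sign updates. The helper names and the choice of three orthogonal 64-unit patterns (rows of a Sylvester-style Hadamard matrix) are my own assumptions. Note that `recall` only ever looks at `W` and never at the stored patterns, which is exactly the point the quote makes.

```python
import numpy as np

def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n must be a power of 2).
    Its rows are mutually orthogonal +1/-1 vectors, handy as well-separated memories."""
    H = np.array([[1]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H

def train_hebbian(patterns):
    """Hebbian learning: W = (1/N) * sum over patterns of p p^T, with no self-connections."""
    n_units = patterns.shape[1]
    W = patterns.T @ patterns / n_units
    np.fill_diagonal(W, 0.0)
    return W

def energy(W, s):
    """Hopfield energy E = -1/2 * s^T W s."""
    return -0.5 * s @ W @ s

def recall(W, state, max_sweeps=10):
    """Asynchronously set each unit to the sign of its local field until nothing changes.
    Returns the settled state and the energy recorded after every single-unit update."""
    s = state.copy()
    energies = [energy(W, s)]
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            field = W[i] @ s
            if field != 0:  # a zero field leaves the unit as it is
                new_value = 1 if field > 0 else -1
                if new_value != s[i]:
                    s[i] = new_value
                    changed = True
            energies.append(energy(W, s))
        if not changed:
            break
    return s, energies

# Store three 64-unit "images", then perturb one and let the network settle.
H = hadamard(64)
memories = H[1:4]
W = train_hebbian(memories)
probe = memories[0].copy()
probe[:6] *= -1                     # flip six units: the perturbed image
retrieved, energies = recall(W, probe)
print("recovered stored memory:", np.array_equal(retrieved, memories[0]))
print("energy went from", energies[0], "to", energies[-1])
```

With a symmetric W and a zero diagonal, each asynchronous update can only lower the energy or leave it unchanged. The state therefore slides downhill into a minimum. With only a few well-separated memories, that minimum is the stored pattern the probe started near.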

As you dive deeper into the book, the theory gets harder, the maths gets trickier and weirder, and you start to diverge from maths into what is effectively human psychology:

‘While these bespoke models are targeting specific systems in the brain, LLMs break the mold. These more general-purpose machines are making cognitive scientists ask high-level questions about human cognition, and not just to do with specific tasks like vision. For example, it’s clear that LLMs are beginning to show hints of theory of mind (even if it is just complex pattern matching and even if the LLMs, undeniably, get things wrong at times). Can they help us understand this aspect of human cognition? Not quite. At least, not yet. But cognitive scientists, even if they aren’t convinced of an LLM’s prowess in this arena, are nonetheless intrigued.’4

All I’d say at this point is: let’s refer back to Monster. How does a dog learn? It seems to learn by experiential feedback. Things happen. It remembers. And from that emotional response onwards, it behaves differently.

Let me give you an example. There is an alley we walk through where, on the right-hand side, a big bouncy boxer called Frank lives. Sadly, he once managed to have a go at Monster. So even though Frank is safely behind a fence, mon mon gets all freaked out and wants to get out of that alley real quick. That one-off event has scared mon mon for life. He will always respond that way.

Why I bring that up is quite simple: life, and learning, is actually about feedback. It’s about changing your corpus of knowledge as you experience things, and changing your future behaviour. How does an LLM, or any form of ML, have feedback? You can perhaps mask it with an agent that learns how you have reacted in the past, but that is a change to the input rather than a change to memory and emotion. It is not, yet, capable of going, ‘Oh right, got that wrong. Let’s ditch all that and start again.’ Right now an LLM is fixed to its training data. That is not a great starting point.

Here’s another example, sort of a reductio ad absurdum. Imagine an LLM that existed centuries ago, right before Galileo upset the Catholic Church:

‘James Burke says, “You are what you know.” He explains that fifteenth-century Europeans knew that everything in the sky rotated around the earth. Then Galileo’s telescope changed that truth.’

How would you retrain an LLM to suddenly ditch everything it knew about the Earth being the centre of the universe and instead believe that it orbits the Sun? And yet keep what was still right, without retraining the whole damn thing? There seem to be many flaws in what we are perceiving as the next big thing.

Other ah ha moments

At the same time, these moments just caressed my brain and went, ‘Oh, so that’s what all that A-Level maths was about’:

‘Every toss of the coin is a sample from the underlying distribution. Here’s an example of what happens when we sample a 100,000 times. (Thanks to something called the square root law, the counts of heads and tails will differ by a value that’s on the order of the square root of the total number of trials; in this case, it will be on the order of the square root of 100,000, or about 316.)’5

If you know the maths of many, many things in life, then you can predict with high certainty what is happening. Yet in most of life you don’t have a mental track record of 100,000 coin tosses, so what you have is feels. Memories are atrocious and fallible, and this is where machines can be wonderfully better than humans: they remember numbers far better than we do, so in that respect they can predict far better than we can.
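The square root law in that coin-toss quote is easy to check for yourself. Here is a minimal sketch in Python using only the standard library; `toss_difference` is a hypothetical helper name. For a fair coin, heads - tails = 2·heads - n. Its variance is 4 · n · ¼ = n, so its typical size is √n, roughly 316 for 100,000 tosses.

```python
import math
import random

def toss_difference(n_tosses, seed=None):
    """Toss a fair coin n_tosses times; return |heads - tails|."""
    rng = random.Random(seed)
    heads = sum(rng.random() < 0.5 for _ in range(n_tosses))
    return abs(heads - (n_tosses - heads))

n = 100_000
print(f"sqrt(n) = {math.sqrt(n):.0f}")
for seed in range(5):
    gap = toss_difference(n, seed)
    # The gap is typically a few hundred while n is 100,000, so the *fraction* of excess heads is tiny.
    print(f"seed {seed}: |heads - tails| = {gap}, as a fraction of n: {gap / n:.4f}")
```

The gap should bounce around from run to run, but stay on the order of a few hundred rather than thousands. Meanwhile the gap as a share of all tosses shrinks as n grows.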

An interesting point:

‘Let’s start with window glass. One method for making such glass is to start with the raw materials—usually silica (sand), soda ash, and limestone, with silica being the primary component. The mixture is melted to form molten glass and then poured into a “float bath.” The bath gives plate glass its flatness and helps cool the molten material from temperatures of over 1,000°C down to about 600°C. This flat material is further “annealed,” a process that releases any accumulated stresses in the glass. The key, for our purposes, is that the resulting glass is neither a solid with an ordered crystalline structure nor a liquid. Instead, it’s an amorphous solid where the material’s atoms and molecules don’t conform to the regularity of a crystal lattice.’6

What the hell does that mean, you may ask. Well, go to a really old building (the UK is full of them) and look closely at the glass windows: you may just notice that the bottoms are a touch thicker than the tops. The glass has been flowing for hundreds of years. It basically isn’t what you think it is.

Five Stars

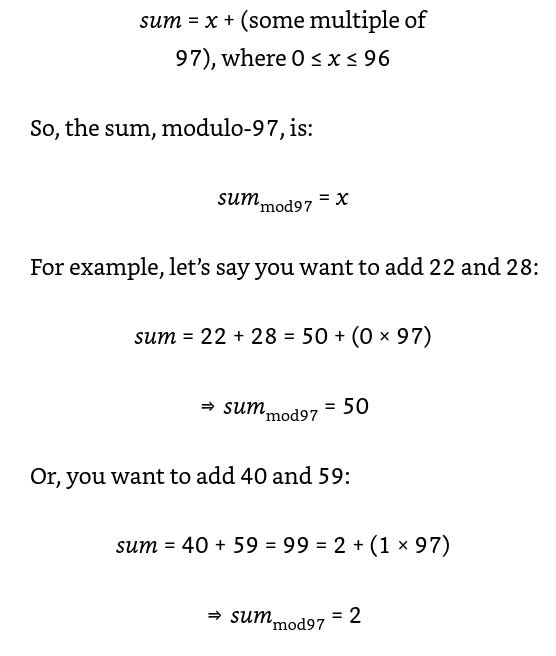

So I will give this book five stars and one star. Go with me. You have such splendid maths as:

Which, if you are a maths-something genius, I guess, is fantastic, beautiful, and understandable, so the book gets five stars. Because if you don’t get the maths, then there is something you are sort of missing about LLMs, and all of ML. You have to understand the machine ‘psychology’ of the intelligence to make it make sense; at some level, you have to know how this thing is thinking to understand it. You don’t need to be taught how a human thinks, as you already have a certain Venn-diagram overlap with other humans, but you do need, as a new capability, to understand how these infernal machines work.

If you don’t, you are stuffed in the new tech reality.

At the same time, human stories such as:

‘Initially, Guyon and Vapnik argued over whether the kernel trick was important. Boser, meanwhile, was more practical. “This was a very simple change to the code. So, I just implemented it, while [they] were still arguing,” Boser told me. Guyon then showed Vapnik a footnote from the Duda and Hart book on pattern classification. It referred to the kernel trick and to the work of mathematicians Richard Courant and David Hilbert, giants in their fields. According to Guyon, this convinced Vapnik. “He said, ‘Oh, wow, this is something big,’” Guyon said.’7

just get subsumed by the maths. I imagine Michael Lewis writing this book and being amazed at the capacity of humans to create such immense beauty. That would get a five-star rating. Instead, the humanity gets lost in the maths. There could have been such a powerful story about the people. The emotions. Everything that makes life what it is. Yet we get a bunch of stuff about mat mul. So it gets one star instead.
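For the curious, the kernel trick that Guyon and Vapnik argued over boils down to one identity: some kernel functions compute a dot product in a higher-dimensional feature space without ever building the features. Here is a minimal sketch in Python with NumPy. It assumes the degree-2 polynomial kernel k(x, z) = (x · z)² on 2-D inputs as the example. This is not Boser’s code, and `phi` and `poly_kernel` are hypothetical names.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map for a 2-D point: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])

def poly_kernel(x, z):
    """Homogeneous polynomial kernel (x . z)^2, computed in the original 2-D space."""
    return float(np.dot(x, z)) ** 2

x = np.array([1.0, 2.0])
z = np.array([3.0, 4.0])
explicit = float(np.dot(phi(x), phi(z)))   # dot product after mapping to 3-D
trick = poly_kernel(x, z)                  # same quantity, no mapping needed
print(explicit, trick)
```

Both numbers should come out at 121, up to floating-point rounding. An SVM only ever needs dot products between data points. Swapping the plain dot product for a kernel like this therefore lets it work in the bigger feature space at almost no extra cost, which is presumably why Boser could call it ‘a very simple change to the code’.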

Still, read the book, as you can skip some (me), most, or all of the maths and still get a good upgrade in your understanding of how machines learn.

1. P. 300
2. P. 150
3. P. 267
4. P. 428
5. P. 108
6. P. 245-246
7. P. 236

Very intriguing. Regarding the Galilean LLM, I suspect that until you can answer ‘how would an LLM change its understanding of the way the world is?’, it would always come down to retraining. But then it’s a language model after all, and not a general-purpose AI.

Sorry, but the pedant in me needs to point out that the glass is only thicker at the bottom because it was made that way (blown, not float). The flow rate is approximately 1 nm per billion years.

ref: https://ceramics.onlinelibrary.wiley.com/doi/abs/10.1111/jace.15092